Sometimes... I wonder if I'm going to be replaced by AI.

I learned chip design at U.C. Berkeley in the 1990s, a time when chips were put together using “gate-level design” (AND, OR, etc.). I was a hobbyist and a nerd, and I’d been playing with batteries and bulbs since before I could remember. For at least six years before college, I’d been building electronics designs from the ubiquitous “74xx” chips, available at any reputable electronics store. At university, of course, things were more rigorous. We learned to piece gates together to create arbitrary functions (binary adders, traffic light controllers, etc.) using the infamous “Karnaugh Maps.” We then learned to optimize those designs using early logic optimization algorithms (like Berkeley’s own “espresso”).

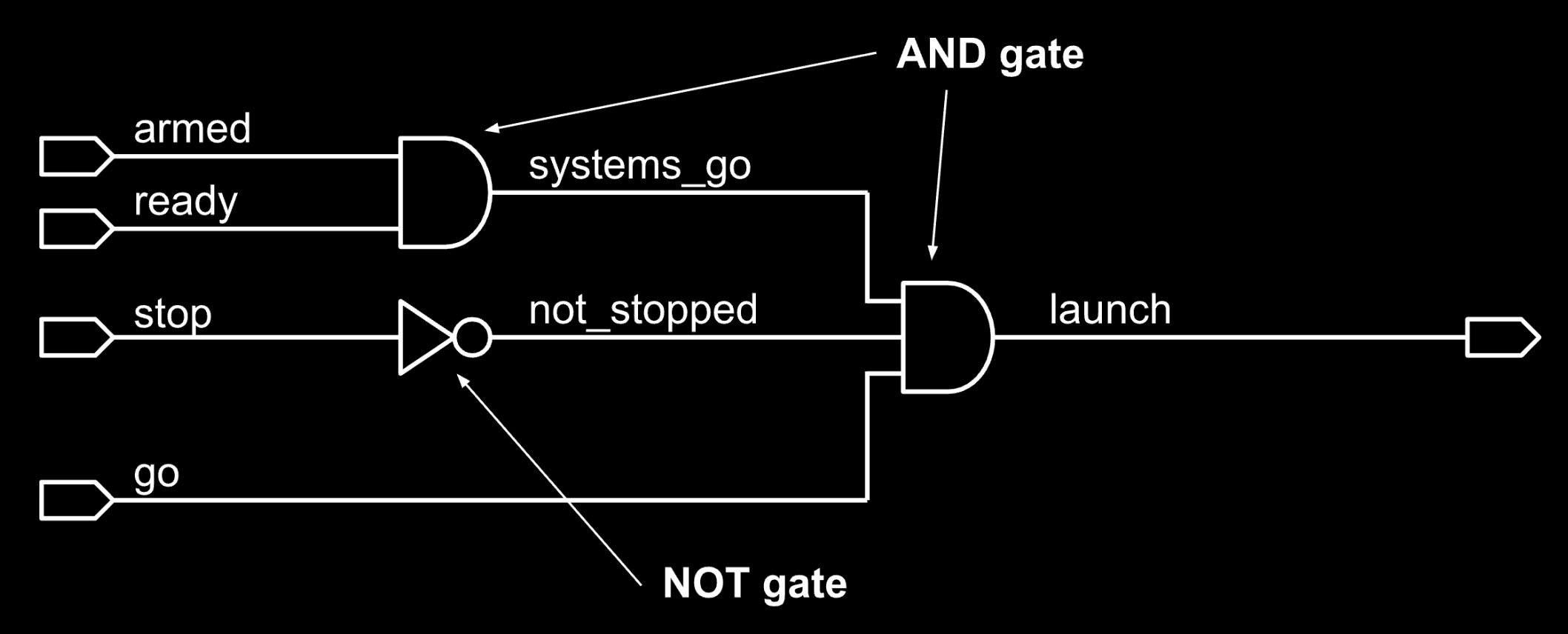

Imagine a circuit that creates a signal “launch” when the “go” is pressed AND we have NOT pressed “stop” AND we are “armed” AND “ready”. A gate-level design for this circuit, for many years, was entered schematically in a GUI like this:

It looks simple enough, but a few choices had already been made before arriving at the above. We could have combined the two AND gates to save some area. We could have pushed “stop” further downstream as a “final check” near the output. Then, after we’ve decided on a circuit like the above, we have to choose from dozens of power/performance/area variations of each gate.

To make all those choices, designers use “timing constraints” to specify rules such as the maximum delay from “go” to “launch,” the maximum time to process “stop,” and the expected behavior if “stop” and “go” are received nearly simultaneously.
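A minimal Python sketch of how timing constraints drive those choices: try every combination of cell variants for the three gates, keep only the combinations where each input-to-“launch” path meets its delay budget, and pick the one with the least area. The instance and cell names mirror the gate-level Verilog shown later in this article; the delay and area numbers, the constraints, and the function names are all invented for illustration, and brute-force search stands in for the far smarter algorithms real tools use.

```python
from itertools import product

# Hypothetical cell library: variant name -> (delay in ps, area in arbitrary units).
# Every number here is invented for illustration.
LIBRARY = {
    "gate1": {"AND2_1X": (40, 2), "AND2_4X": (25, 4), "AND2_8X": (20, 7)},  # armed AND ready
    "gate2": {"NOT_1X": (20, 1), "NOT_2X": (15, 2), "NOT_4X": (10, 4)},     # NOT stop
    "gate3": {"AND3_4X": (45, 5), "AND3_16X": (30, 12)},                    # final 3-input AND
}

# Hypothetical timing constraints: maximum delay (ps) from each input to "launch".
CONSTRAINTS = {"go": 40, "stop": 45, "armed": 60, "ready": 60}

# The gates each input passes through on its way to "launch".
PATHS = {
    "go": ["gate3"],
    "stop": ["gate2", "gate3"],
    "armed": ["gate1", "gate3"],
    "ready": ["gate1", "gate3"],
}

def meets_timing(choice):
    """True if every input-to-launch path fits within its delay budget."""
    for signal, gates in PATHS.items():
        delay = sum(LIBRARY[g][choice[g]][0] for g in gates)
        if delay > CONSTRAINTS[signal]:
            return False
    return True

def smallest_passing_choice():
    """Try every variant combination; return the smallest-area one that meets timing."""
    gates = list(LIBRARY)
    best, best_area = None, None
    for variants in product(*(LIBRARY[g] for g in gates)):
        choice = dict(zip(gates, variants))
        if not meets_timing(choice):
            continue
        area = sum(LIBRARY[g][choice[g]][1] for g in gates)
        if best_area is None or area < best_area:
            best, best_area = choice, area
    return best, best_area

choice, area = smallest_passing_choice()
print(choice, area)
# prints {'gate1': 'AND2_4X', 'gate2': 'NOT_2X', 'gate3': 'AND3_16X'} 18
```

With these made-up numbers, only the 16X output gate meets the tight “go” budget, and the search happens to land on the same cells as the gate-level code. Tightening or loosening any entry in `CONSTRAINTS` changes the winner, which is the faster-versus-smaller trade-off in miniature.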

Hardware Description Languages (HDLs) had been around since the mid-80s and had recently been incorporated into Berkeley’s curriculum. An HDL is a written language similar to a regular programming language (like C or Python), except that it adds concepts needed for logic design – parallel computation, strict event timing, and support for arbitrary data types. In my first computer architecture class, we used an HDL to capture our gate-level designs – a handful of gates that added two bits plus an incoming carry made a “full adder,” then 32 of those full adders made a “32-bit adder,” and so forth.
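The full-adder-to-32-bit-adder idea can be sketched as a small software model (Python here, not an HDL, and the function names are our own): each full adder is a handful of AND, OR, and XOR operations, and a 32-bit “ripple-carry” adder is simply 32 of them chained through their carry signals.

```python
def full_adder(a, b, carry_in):
    """One-bit full adder built only from XOR, AND and OR on 0/1 values."""
    partial = a ^ b
    sum_bit = partial ^ carry_in
    carry_out = (a & b) | (partial & carry_in)
    return sum_bit, carry_out

def ripple_carry_adder(x, y, width=32):
    """Chain `width` full adders, feeding each carry into the next bit position."""
    carry = 0
    result = 0
    for i in range(width):
        a = (x >> i) & 1
        b = (y >> i) & 1
        sum_bit, carry = full_adder(a, b, carry)
        result |= sum_bit << i
    return result, carry  # the final carry is the overflow bit

print(ripple_carry_adder(7, 5))            # prints (12, 0)
print(ripple_carry_adder(0xFFFFFFFF, 1))   # prints (0, 1)
```

Each bit has to wait for the carry from the bit below it, which is why real designs often use faster adder structures; the ripple version is simply the easiest to read.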

The above design written in an HDL, after choosing the exact variant of each gate, might look like this:

```verilog
// Gate-level version: every instance is a specific cell variant from the library
module launch_control ( input armed, ready, stop, go, output launch );

  wire systems_go, not_stopped;

  // armed AND ready
  AND2_4X  gate1 ( .IN1(armed), .IN2(ready), .OUT(systems_go) );

  // NOT stop
  NOT_2X   gate2 ( .IN(stop), .OUT(not_stopped) );

  // go AND (NOT stop) AND (armed AND ready)
  AND3_16X gate3 ( .IN1(systems_go), .IN2(not_stopped), .IN3(go), .OUT(launch) );

endmodule
```

Expressing a design in “code” saves a lot of time that would otherwise be spent drawing wires in a GUI (trust me, I just did it both ways!). If you’re thinking “I find the picture easier to read,” you’re not wrong! It’s just that the code is easier to write, and incalculably easier to maintain. HDLs leverage all the infrastructure written for software code – editors, revision control systems, patch formats, etc. In that computer architecture class, each small team of students built a 32-bit RISC CPU core: a fully pipelined design with ALU, register file, and caches.

Back in the early 1990s, HDLs began to be used for testing designs. HDLs had all the abilities of a software language like C: reading and writing files, generating random inputs, calculating expected outputs, etc. On top of that, they added hardware-specific features needed to model highly parallel systems, like the above circuit scaled up millions of times.

HDLs were also being used to prototype hardware designs at a higher level. Most hardware blocks execute relatively simple algorithms; they just execute them very quickly. It’s usually easy to write the required function at a high level. Our rocket launch controller could be prototyped as:

```verilog
// High-level (behavioral) version: describes what the block does, not which gates to use
module launch_control ( input armed, ready, stop, go, output launch );

  assign launch = (go && !stop && armed && ready);

endmodule
```

That’s pretty easy! It doesn’t capture the exact organization and selection of gate flavors, but it does represent what the final version will do. This is very useful for writing test cases, building up and testing a larger system out of prototyped pieces, etc. In parallel, a designer takes that high-level description and the “timing constraints” and begins the gate-level design. Often, both versions are kept around – the high-level code is much faster to simulate, easier to read, and quicker to debug … while the gate design is what you’re physically building.
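Keeping both versions around pays off in testing. Here is a Python sketch (the function names are hypothetical) with one model mirroring the one-line `assign` and one mirroring the three-gate netlist, checked against each other. With four inputs there are only 16 cases, so it tries them all; for larger blocks, testbenches typically fall back on random stimulus with computed expected outputs.

```python
from itertools import product

def launch_high_level(armed, ready, stop, go):
    """Mirror of the behavioral version: launch = go && !stop && armed && ready."""
    return int(go and not stop and armed and ready)

def launch_gate_level(armed, ready, stop, go):
    """Mirror of the netlist: AND2 -> systems_go, NOT -> not_stopped, AND3 -> launch."""
    systems_go = armed & ready             # gate1
    not_stopped = 1 - stop                 # gate2 (inverter on a 0/1 value)
    return systems_go & not_stopped & go   # gate3

def mismatches():
    """Compare both models over all 2**4 input combinations; return any disagreements."""
    return [
        inputs
        for inputs in product((0, 1), repeat=4)
        if launch_high_level(*inputs) != launch_gate_level(*inputs)
    ]

print(mismatches())  # prints [] because the two versions agree
```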

I clearly remember when I first heard the term “synthesis.” Towards the end of that Computer Architecture course, I went out for a drink with one of the Teaching Assistants. We were talking about how much nicer it was to build the design in code versus schematics, and how neat it had been to start with the “high level” code, and then translate it into gates once we’d seen the whole CPU working. He casually mentioned “You know, there’s a company that has a tool now which automatically translates the high-level code to gates… they’re called Synopsys and they call it ‘synthesis’ … it has some boring name like ‘design compiler’ … ”

My immediate reaction was shock, immediately followed by the realization that it made a lot of sense (“why didn’t I think of that? It’s really just a ‘compiler’…”). The next thought was the comfort of knowing that it would surely be a long time before machines would outperform humans at translating a “high-level” description into the perfect solution for the situation.
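“Just a compiler” is a fair summary. As a deliberately tiny Python sketch of the idea, the function below takes the launch condition as an expression tree and emits a netlist of inverters and 2-input AND gates. The expression format, gate names, and function names are hypothetical; a real tool like Design Compiler also optimizes the logic and picks cell variants to meet timing constraints, and this sketch does neither.

```python
from itertools import count

# A tiny expression language: a signal name (str), ("not", e), or ("and", e1, e2, ...).
LAUNCH = ("and", "go", ("not", "stop"), "armed", "ready")

def synthesize(expr):
    """Compile an expression tree into a netlist of NOT and 2-input AND gates."""
    netlist = []
    names = count(1)

    def emit(node):
        if isinstance(node, str):  # a primary input needs no gate
            return node
        op, *args = node
        if op == "not":
            src = emit(args[0])
            out = f"n{next(names)}"
            netlist.append(("NOT", (src,), out))
            return out
        if op == "and":
            wires = [emit(a) for a in args]
            # Fold the operands, in order, into a chain of 2-input ANDs.
            result = wires[0]
            for w in wires[1:]:
                out = f"n{next(names)}"
                netlist.append(("AND2", (result, w), out))
                result = out
            return result
        raise ValueError(f"unsupported operator: {op}")

    output = emit(expr)
    return netlist, output

def simulate(netlist, output, inputs):
    """Evaluate the gates in order; each gate only reads inputs or earlier wires."""
    values = dict(inputs)
    for kind, srcs, out in netlist:
        if kind == "NOT":
            values[out] = 1 - values[srcs[0]]
        else:  # AND2
            values[out] = values[srcs[0]] & values[srcs[1]]
    return values[output]

netlist, out = synthesize(LAUNCH)
for gate in netlist:
    print(gate)
# prints:
# ('NOT', ('stop',), 'n1')
# ('AND2', ('go', 'n1'), 'n2')
# ('AND2', ('n2', 'armed'), 'n3')
# ('AND2', ('n3', 'ready'), 'n4')
```

The sketch simply chains the ANDs in operand order; restructuring that chain (a balanced tree, or moving `stop` to the last gate) is exactly the kind of choice a real synthesis tool weighs against the timing constraints.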

At that time, circa 1994, humans still wrote most performance-critical software code by hand, even though higher-level languages and compilers had been around for decades. You couldn’t get more “performance critical” than chip design, I assumed, so perhaps we’d be designing chips at gate level forever! Hardware in general, and chips in particular, are extremely expensive to build – no one would cut a few salaries and wind up with an inferior product, would they?

Shortly after that conversation in the bar, I began my first full-time job building a LAN+Modem interface card at 3Com Corporation. This involved designing a PCB packed tight with chips, as well as designing the logic in a Field Programmable Gate Array (“FPGA”) which would arbitrate between the two functions of our card. An FPGA is, essentially, a programmable chip. It is more expensive and slower than a “real” chip, but it’s also an “off the shelf” part you just buy, versus spending large amounts of time and money fabricating custom chips. In many cases, an FPGA is exactly what you need. That was our situation at 3Com – we didn’t need many gates, and they didn’t need to be fast. We just needed a traffic cop that steered traffic through a three-way intersection.

I knew that I could create this design at the gate level, using the FPGA design tools to connect up gates in a GUI. Another designer was assigned to mentor me. He came from the ASIC team down the hall, which had recently built the chip in the Etherlink III, a card that had started selling like crazy… the “dot-com” boom had begun.

The ASIC in that product had been built using Synopsys Design Compiler, a name that rang a bell. My mentor asked if I already knew Verilog – “they are teaching it in school now, it’s a way to write hardware in code instead of drawing gates” – and I replied that I’d built a 32-bit CPU that way. “Great,” he replied, adding “so you won’t need the training.” With that, he took my first few carefully drawn schematics and began to “re-write” them in Verilog. The code looked totally unfamiliar, but I was too embarrassed to mention that fact (it was another year before I realized that Berkeley had been teaching VHDL, a different language!)

I still wasn’t convinced that this tool could design better than I could, and I didn’t like the unfamiliarity of the language. I was sorely tempted to abandon the HDL – I could draw the gates and be done with it. My mentor kept advising against it. Once, in a moment of struggle, I suggested I might scrap the whole thing and spend the weekend recreating the design in schematics, and I still remember his reply: “I wouldn’t wish that on anyone. I’d rather play basketball.” The FPGA started becoming more complex, and a tighter fit, as the product evolved. It was becoming more important to be efficient – maybe our need for a compact, efficient design could give me a win against this infernal tool?!

I decided to build a critical state machine by hand. It was a few dozen gates, but it had to run exceptionally fast, to catch a fleeting event in a rare situation. It had to fit in one small, unused corner of the FPGA. I painstakingly crafted my circuit. My mentor, always up for a challenge, soon came back with something even better. Undaunted, I further polished my work, until I was sure it was impossible to do better. Again, the tool found a better answer. I began suspecting that the synthesized version might not work, as it was squeezing out unrecognizable gates. I wasted precious time checking it, hoping it had made a mistake, only to find that it was just fiendishly clever. While I admired it, another improved version arrived. I gave up checking its work and went back to optimizing my own. Yet another version landed.

I gave up, concluding: “This thing is going to take all our jobs…”

That thought, mixed with a little shame, burned in my mind. It was like my colleague had a magic wand, and could tell the Design Compiler “focus on making this design faster … now make it smaller …” and it would churn through thousands of possible solutions like magic. It felt like he wasn’t designing, he was telling it what to design. How could I compete with that?? Eventually, I began to calm down. After all, I didn’t kick myself when a pocket calculator could multiply faster than me, did I? You know what they say – if you can’t beat them: jump in with both feet, transfer to the ASIC team, and become a full-time Verilog coder.

It was interesting that Synopsys used Verilog as the input language for their tool. Verilog was not designed to be compiled into gates, and can express many things that the synthesis tool can’t translate into gates. One of the first things we learn is the “synthesizable subset” of the language, a line that is ever-shifting and contains a significant gray zone (“I guess it can do that, but you don’t want it to”). The expressiveness of Verilog meant that Synopsys (and later other companies) could slowly expand that “synthesizable subset”. Verilog evolved into the more powerful SystemVerilog, following the model of C evolving into C++.

Overall, I would estimate that synthesis increased productivity by around 1000%. Let’s consider for a moment how much chip design has changed over that time period, and how complex things have become.

Chip design teams used to be perhaps a dozen people, and are now typically around a hundred (the range is wide – many chips are still built by core teams of around a dozen people, while some take hundreds). Back then, a chip cost $50K to fabricate; that number is now around $20M. That first FPGA I designed was 3K gates, and my first ASIC was 50K gates – in contrast, a modern chip today has a gate count in the billions.

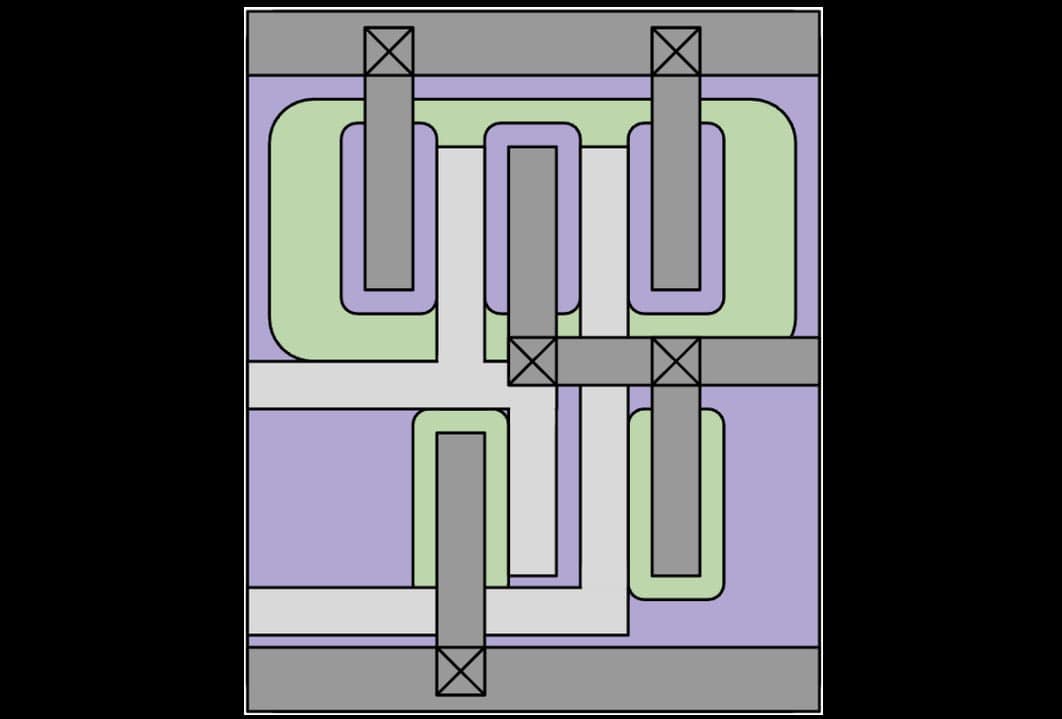

We should think of a chip as a machine. The gears in this modern machine are called “gates”:

Above is a simple gate with four transistors. On paper, the wires appear ~5mm wide, in different colors for different “layers” – a modern chip would have ~15 such layers.

These wires are what we are talking about when we say that a new phone has a “5nm chip.” This tells us that our picture is drawn at 1,000,000:1 scale (5mm : 5nm). A typical CPU chip will be around 12x12mm, so drawn at the same scale, it would be a 12x12km field of gates.

To get a sense for how fast these chips can crunch numbers, consider this. In 2025, our phones typically have 6 CPU cores, each executing ~4 instructions per clock at 2.5GHz, which means they’re churning through 60 instructions per nanosecond. A nanosecond is a billionth of a second: in that time, light travels only about 30 cm, roughly the distance from our phone to our face. A chip is a fast machine.
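The back-of-the-envelope figures above can be checked in a few lines of Python. The inputs are the article’s own round numbers (5 mm on paper vs. 5 nm on silicon, a 12 mm die, 6 cores, ~4 instructions per clock, 2.5 GHz); the only added constant is the speed of light.

```python
# Back-of-the-envelope checks (integer units where possible, to avoid float rounding).
NM_PER_MM = 1_000_000
NM_PER_M = 1_000_000_000
NS_PER_S = 1_000_000_000

# Drawing scale: a ~5 mm wire on paper stands for a ~5 nm wire on silicon.
scale = (5 * NM_PER_MM) // 5                      # 1,000,000 : 1
# A 12 mm die drawn at that scale, converted to metres.
chip_side_m = 12 * NM_PER_MM * scale // NM_PER_M  # 12,000 m = 12 km

# Throughput: cores x instructions per clock x clock cycles per nanosecond (2.5 GHz).
cores, instructions_per_clock, cycles_per_ns = 6, 4, 2.5
instructions_per_ns = cores * instructions_per_clock * cycles_per_ns

# Distance light travels in one nanosecond, in centimetres.
SPEED_OF_LIGHT_M_PER_S = 299_792_458
light_cm_per_ns = SPEED_OF_LIGHT_M_PER_S * 100 / NS_PER_S

print(scale, chip_side_m, instructions_per_ns, round(light_cm_per_ns, 1))
# prints 1000000 12000 60.0 30.0
```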

Looking at chips today, it’s hard to imagine how they could have been designed without the revolutionary technology of logic synthesis. To be honest, synthesis did take away most schematic-level design jobs: it did that work better and faster, enabling us to focus on designing larger, more feature-rich chips. In return, it gave us a vibrant industry that today employs 338,000 people, as well as supporting 300 downstream economic sectors, employing over 26 million U.S. workers [SIA].

Despite impressive growth and innovation, the semiconductor industry is facing another inflection point. Design costs are too high, and still rising. Schedules are too long, and still increasing. Job market data, meanwhile, predicts a massive shortfall in the number of engineers coming into the profession. Surprisingly, there is more concern about a shortage of chip designers than of AI data scientists.

There have been many attempts to find the next leap forward in chip design productivity.

- Register-Transfer Level (RTL) design is dominated by SystemVerilog, but there is also VHDL, as well as a handful of smaller “modern” languages, that all operate fundamentally at “the same level” – i.e., one could write a program to translate between them fairly easily (unlike translating RTL into gates).

- High Level Synthesis tools, in contrast, take a variety of input languages (C/C++, Python, etc) and “unroll” the code, typically translating into SystemVerilog. This methodology has its adherents and its successes, but the reality is that most major chips are not designed this way. Fundamentally, this approach seems to enable faster design cycles, in exchange for leaving performance on the table. In competitive markets, we don’t often choose to leave performance on the table.
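What “unrolling” means can be sketched in Python: a software loop performs its additions one after another, while an HLS tool can lay the same additions out as parallel hardware adders. This minimal sketch assumes the tool builds a balanced adder tree (real tools decide how far to unroll based on user directives and area/timing targets, which the sketch ignores), and the function names are hypothetical. Summing eight values takes a chain of seven dependent additions in the loop, but only three adder levels in the tree.

```python
def sequential_sum(values):
    """Software view: one addition per loop iteration, each waiting on the previous one."""
    total = 0
    for v in values:
        total += v
    return total

def unrolled_adder_tree(values):
    """Hardware view after full unrolling: every addition becomes its own adder,
    wired as a balanced tree. Returns (sum, number of adder levels)."""
    if not values:
        return 0, 0
    level = list(values)
    depth = 0
    while len(level) > 1:
        # Each adjacent pair feeds one adder; an odd leftover passes through to the next level.
        nxt = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            nxt.append(level[-1])
        level = nxt
        depth += 1
    return level[0], depth

data = [3, 1, 4, 1, 5, 9, 2, 6]
print(sequential_sum(data), unrolled_adder_tree(data))  # prints 31 (31, 3)
```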

It is now time to move to a higher level of abstraction in design, a new ‘synthesis’ moment if you will. Recent advancements in AI are inviting us to consider if natural language could be our next “higher-level language.” Like last time, this will quickly go from something casually mentioned by a colleague, to staring us in the face with results we have trouble believing.

So, will AI replace chip designers? Thinking back to the early synthesis days, I can now confidently answer: no, it won’t.

Without synthesis tools, it’s true that we would need much larger teams to create today's chips, but it’s also true that the chip market would be much smaller – fewer products, at higher cost, and lower performance. The vast majority of chip design jobs would be monotonous, repetitive, and lower paying. A small group of people would do the high-level design work, then a large group would finish the job – “coloring between the lines,” like teams of animators working on a big Hollywood action movie.

Would chip designers have preferred to be crafting gate-level designs to this day, versus synthesizing the amazing chips that defined the 21st century? No, they wouldn’t.

Synthesis tools propelled an industry that has multiplied many times in revenue since 1990. For similar reasons, I expect that AI-enhanced chip design will increase the number of jobs and make them more interesting. Soon, more chips will be designed, each more precisely targeted to its application, with more features, all at lower cost and power. AI models will enable us to build more of the chips we want, instead of just the chips we can.